Investigation Shows Instagram Facilitates Child Exploitation

How Instagram’s algorithm creates a network for pedophiles

AUG 03, 2023

A recent investigation conducted by The Wall Street Journal and researchers from Stanford University and the University of Massachusetts Amherst revealed that Instagram “connects and promotes” a network of accounts dedicated to the commission and purchase of underage sex content.

Most parents have at least a vague awareness of the dangers present on social media platforms, but it’s still easy to think that the really scary stuff is happening only in the darkest corners of the internet.

Given that Instagram is one of the most mainstream social networks (25% of parents use Instagram themselves), it is especially shocking that such horrifying abuse is happening there.

How Does it Work?

Some accounts on Instagram sell child sex material through perverse menus (similar to a restaurant’s) and offer specific acts for commission. Abhorrent menus include prices for videos of children harming themselves or engaging in sexual acts with humans or animals, and even offer in-person meet-ups for the right price.

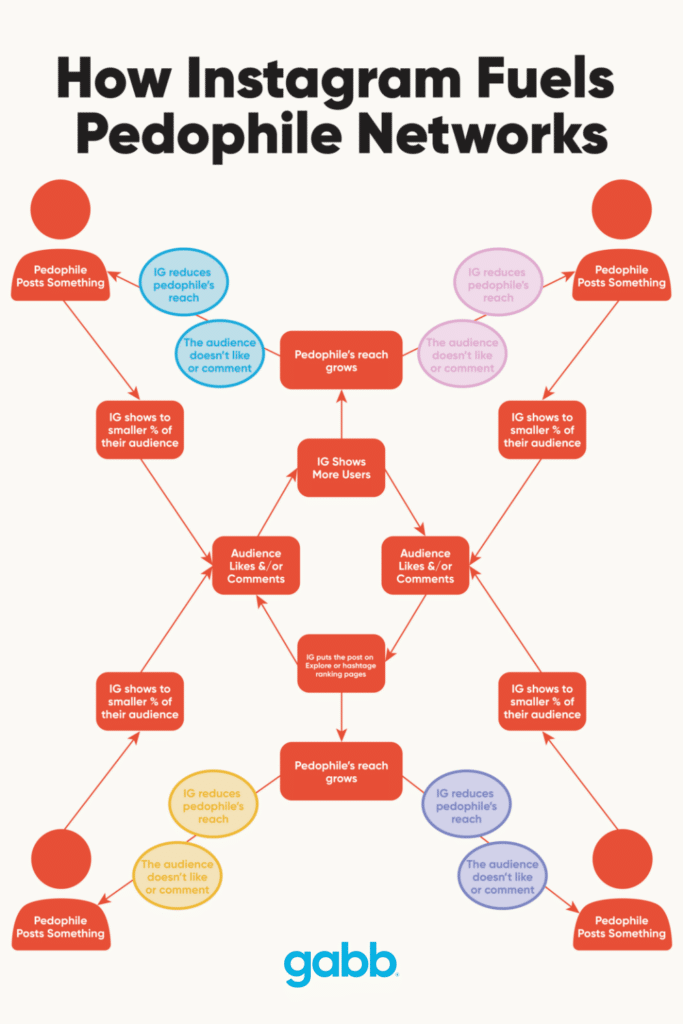

Instagram’s algorithm promotes abuse and connects criminals

Instagram’s algorithm works by delivering content to the user based on their previous interactions, views, and likes. Its purpose is to provide the user with a personalized experience by recommending content that will keep them on the app.

Meta has deflected blame by saying its internal statistics show that fewer than one in ten thousand posts viewed contain child exploitation. As required by law, Instagram reported over five million images containing child pornography to the National Center for Missing and Exploited Children in 2022. Even one image exploiting children is one too many.

Researchers created test accounts that searched for child sex abuse content. As soon as a test account viewed a single account in the network, the platform inundated it with “suggested for you” recommendations of possible child pornography sellers and buyers. Many of the recommended accounts contained links to content sites outside of Instagram.

The algorithm actively connects and guides pedophiles to criminal content sellers through these recommendations by linking users with similar niche interests. These seller accounts openly advertise child sex abuse material for sale and often claim to be run by the children themselves.

Instagram enables users to search explicit hashtags related to underage sex (such as #pedowhore and #preteensex) and connects them with accounts offering illicit content.

Meta is Aware

Meta has acknowledged the problems within its enforcement operations and stated that it has established an internal task force to address these issues. The company has taken down 27 pedophile networks in the past two years and has blocked thousands of hashtags that sexualize children.

The study found that Meta’s automated screening for existing child exploitation content can’t detect new images or advertisements offering content for sale. The platform’s efforts to fight the spread of child pornography focus primarily on known images and videos that are already in circulation.

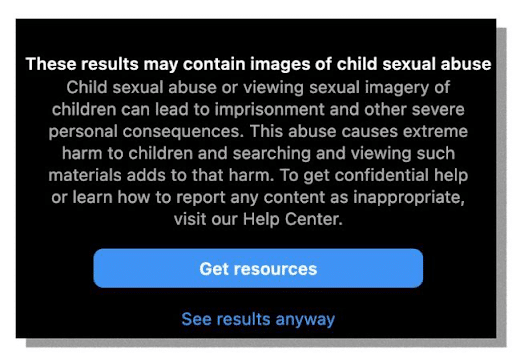

Prior to the publication of The Wall Street Journal’s investigation, Instagram allowed users to search for hashtags featuring illegal activities. There were also instances when a popup would warn the user that the content they were about to view might contain “images of child sexual abuse” but still gave the user the option to “see results anyway.” That option has since been disabled, but Meta did not offer a statement when The Wall Street Journal asked why it was allowed in the first place.

Are These Predators Punished?

Meta’s penalties for violating community standards are generally imposed on accounts rather than users or devices, making it easy for users to evade enforcement by running multiple accounts.

We don’t pretend this is an easy problem for Meta to solve, but we share the Surgeon General’s stance that the burden of protecting our children should not fall on parents and children alone. In his words, “There are actions technology companies can take to make their platforms safer for children and adolescents.”

As for legal punishment, it’s a complicated and lengthy road. Law enforcement agencies frequently use forensic software such as the Child Protection System to scour file-sharing networks and chat rooms, aiming to identify computers engaged in downloading explicit photos and videos portraying the sexual exploitation of children aged 12 and below. Officers flag these images and generate a unique digital identifier (referred to as a “hash”) for each file, enabling the tracking of individuals who download or view the content.

55% of those who consume child sexual abuse material admit to abusing children.

—Forensic psychologist, Michael Seto

The data revealed by the software is insufficient to lead to an arrest, but it serves as crucial evidence for establishing probable cause in obtaining a search warrant. Once authorized, law enforcement officers can confiscate and examine devices to determine if they contain illicit images. Generally, a larger assortment of such content is stored on computers and hard drives compared to what is shared online.

How to Help

Human trafficking is pervasive, with fifteen to twenty thousand people trafficked each year in the U.S. It’s a complex and destructive problem that can seem impossible to confront. But with combined small efforts, big changes are possible.

Children, especially those who are most vulnerable, need adults who will step up and protect them. Parents can start by learning the signs and how to report them through the US Blue Campaign. We can contact these companies or local governments and voice our concerns about the lack of safety, and demand they take action.

Parents might also consider raising awareness of, or supporting, nonprofit organizations such as Saprea.org, which provides support for survivors of sexual abuse.

The simplest and most effective step is to talk to kids about the dangers of social media and encourage them to delay or avoid creating accounts.

If a child isn’t ready to handle the risks of social media, don’t chance it. There are good options available now that allow kids to take early steps into technology without throwing them in the deep end.

They may face peer pressure to get the latest smartphone and be on the latest social network. And as parents, we often feel the pressure too. But as this recent investigation shows, the risks are just too great to give in and simply hope our child isn’t the one who is targeted next.

Learn more about social-media-free smartphones and safe devices for kids.

How will you stand up for our kids? Share your ideas in the comments.

Sound Seting on Sep 13, 2023 07:32 PM

Instagram's involvement in child exploitation is deeply disturbing. It's time for social media platforms to prioritize the safety of their young users. Instagram must take swift action to prevent, detect, and report any instances of child exploitation. This means stronger content moderation, cooperation with law enforcement, and better safeguards for vulnerable users. Children's safety comes first, and it's crucial for Instagram and other platforms to address this issue and create a safer digital environment for everyone.

Gabb on Sep 22, 2023 11:38 AM

Thanks for sharing!